Principal Research Scientist at NVIDIA

Publications

Computer graphics research with a twist of neutron transport

BOOK

A Hitchhiker’s Guide to Multiple Scattering

Exact Analytic, Monte Carlo and Approximate Solutions in Transport Theory

Eugene d’Eon

[free pdf]

PAPERS

2025

Practical Gaussian process implicit surfaces with sparse convolutions

Kehan Xu | Benedikt Bitterli | Eugene d’Eon | Wojciech Jarosz

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia), 44(6), December 2025

[pdf]

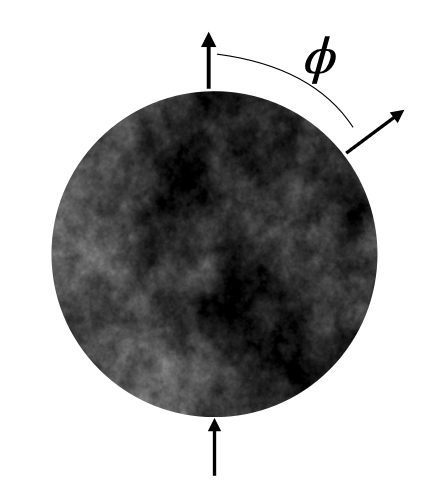

Harmonic caching for walk on spheres

Zihong Zhou | Eugene d’Eon | Rohan Sawhney | Wojciech Jarosz

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia), 44(6), December 2025

[pdf]

High-Precision Benchmarks for the Stochastic Rod

Eugene d'Eon | Anil Prinja

Nuclear Science and Engineering

[pdf]

2024

Appearance Modeling of Iridescent Feathers with Diverse Nanostructures

Yunchen Yu | Andrea Weidlich | Bruce Walter | Eugene d'Eon | Steve Marschner

SIGGRAPH Asia 2024

[pdf]

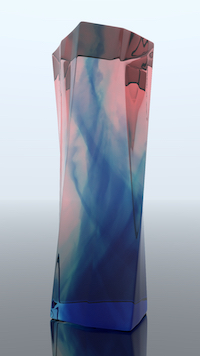

Reconstructing Translucent Thin Objects from Photos

Xi Deng | Lifan Wu | Bruce Walter | Eugene d'Eon | Ravi Ramamoorthi | Steve Marschner | Andrea Weidlich

SIGGRAPH Asia 2024

[pdf]

A Generalized Ray Formulation For Wave-Optics Rendering

Shlomi Steinberg | Ravi Ramamoorthi | Benedikt Bitterli | Eugene d'Eon | Ling-Qi Yan | Matt Pharr

SIGGRAPH Asia 2024

[pdf]

A Free-Space Diffraction BSDF

Shlomi Steinberg | Ravi Ramamoorthi | Benedikt Bitterli | Arshiya Mollazainali | Eugene d'Eon | Matt Pharr

SIGGRAPH 2024

[pdf]

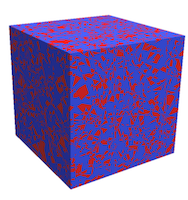

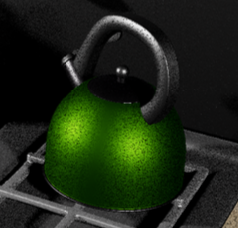

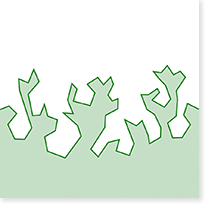

From microfacets to participating media: A unified theory of light transport with stochastic geometry

Dario Seyb | Eugene d’Eon | Benedikt Bitterli | Wojciech Jarosz

SIGGRAPH 2024

[pdf]

VMF Diffuse: A Unified Rough Diffuse BRDF

Eugene d’Eon | Andrea Weidlich

EGSR 2024

[pdf] [Mathematica] [ShaderToy]

2023

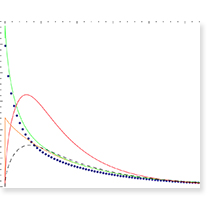

An Approximate Mie Scattering Function for Fog and Cloud Rendering

Johannes Jendersie | Eugene d’Eon

SIGGRAPH 2023 Talks

[pdf]

Student-T and Beyond: Practical Tools for Multiple-Scattering BSDFs with General NDFs

Eugene d’Eon

SIGGRAPH 2023 Talks

[pdf]

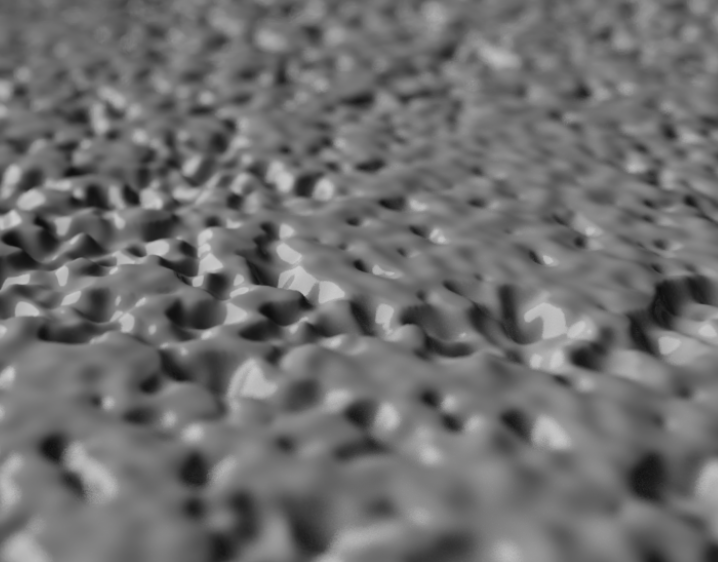

Microfacet Theory for Non-uniform Heightfields

Eugene d’Eon | Benedikt Bitterli | Andrea Weidlich | Tizian Zeltner

SIGGRAPH 2023 Papers

[pdf]

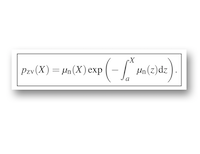

Beyond Renewal Approximations: A 1D Point Process Approach to Linear Transport in Stochastic Media

Eugene d’Eon

ANS M&C 2023

[pdf]

2022

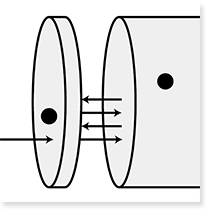

A Position-Free Path Integral for Homogeneous Slabs and Multiple Scattering on Smith Microfacets

Benedikt Bitterli | Eugene d’Eon

EGSR 2022

[pdf]

2021

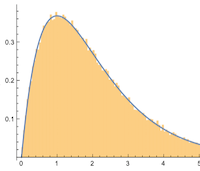

An unbiased ray-marching transmittance estimator

Markus Kettunen | Eugene d’Eon | Jacopo Pantaleoni | Jan Novak

SIGGRAPH 2021

[pdf]

Markovian Binary Mixtures: Benchmarks for the albedo problem

Coline Larmier | Eugene d’Eon | Andrea Zoia

ANS M&C 2021

[pdf]

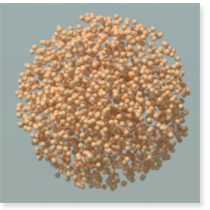

An analytic BRDF for materials with spherical Lambertian scatterers

Eugene d’Eon

EGSR 2021 (Best Paper Award)

[pdf] [video] [code]

2020

Zero-Variance Theory for Efficient Subsurface Scattering

Eugene d’Eon | Jaroslav Křivánek

SIGGRAPH 2020 Courses: Advances in Monte Carlo rendering: the legacy of Jaroslav Křivánek

[pdf] [video] [code]

Random variate generation/Importance sampling using integral transforms

Eugene d’Eon

Tech Report

[pdf] [code]

2019

A Reciprocal Formulation of Nonexponential Radiative Transfer. 2: Monte Carlo Estimation and Diffusion Approximation

Eugene d’Eon

The Journal of Computational and Theoretical Transport

[pdf]

The Albedo Problem in Nonexponential Radiative Transfer

Eugene d’Eon

International Conference on Transport Theory (ICTT) – September, 2019, Paris

[pdf]

Nonexponential Radiative Transfer: Reciprocity, Monte Carlo Estimation and Diffusion Approximation

Eugene d’Eon

International Conference on Transport Theory (ICTT) – September, 2019, Paris

Radiative Transfer in half spaces of arbitrary dimension

Eugene d’Eon

The Journal of Computational and Theoretical Transport

[pdf]

2018

Isotropic Scattering in a Flatland Half-Space

Eugene d’Eon

The Journal of Computational and Theoretical Transport

[pdf]

A Reciprocal Formulation of Nonexponential Radiative Transfer. 1: Sketch and Motivation

Eugene d’Eon

The Journal of Computational and Theoretical Transport

[pdf]

2017

JPEG Pleno Database: 8i Voxelized Full Bodies (8iVFB v2) – A Dynamic Voxelized Point Cloud Dataset

Eugene d’Eon | Bob Harrison | Taos Myers | Philip A. Chou

ISO/IEC JTC1/SC29 Joint WG11/WG1 (MPEG/JPEG) input document WG11M40059/WG1M74006, Geneva, January 2017

[data]

2016

Additional Progress Towards the Unification of Microfacet and Microflake Theories

Jonathan Dupuy | Eric Heitz | Eugene d’Eon

EGSR 2016

[pdf]

Diffusion approximations for nonclassical Boltzmann transport in arbitrary dimension

Eugene d’Eon

Tech Report

[pdf]

Multiple-Scattering Microfacet BSDFs with the Smith Model

Eric Heitz | Johannes Hanika | Eugene d’Eon | Carsten Dachsbacher

SIGGRAPH 2016

[pdf]

2014

A Zero-variance-based Sampling Scheme for Monte Carlo Subsurface Scattering

Jaroslav Křivánek | Eugene d’Eon

ACM SIGGRAPH 2014 Talks

[pdf]

Computer graphics and particle transport: our common heritage, recent cross-field parallels and the future of our rendering equation

Eugene d’Eon

Digipro 2014

A Fiber Scattering Model With Non-Separable Lobes

Eugene d’Eon | Steve Marschner | Johannes Hanika

ACM SIGGRAPH Talks 2014

[1-page abstract] [slides] [supplemental]

Importance Sampling Microfacet-Based BSDFs using the Distribution of Visible Normals

Eric Heitz | Eugene d’Eon

EGSR 2014 (Best Paper Award)

[pdfs]

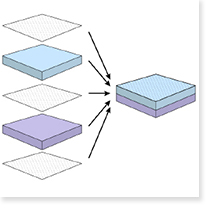

A Comprehensive Framework for Rendering Layered Materials

Wenzel Jakob | Eugene d’Eon | Otto Jakob | Steve Marschner

ACM SIGGRAPH 2014

[pdf]

2013

Rigorous asymptotic and moment-preserving diffusion approximations for generalized linear Boltzmann transport in arbitrary dimension

Eugene d’Eon

Transport Theory and Statistical Physics 2013

[pdf]

Importance Sampling for Physically-based Hair Fiber Models

Eugene d’Eon | Steve Marschner | Johannes Hanika

ACM SIGGRAPH Asia 2013 Talks

[pdf]

A Principle of Invariant Imbedding with Memory

Eugene d’Eon

International Conference on Transport Theory 23 (Sept 2013, Santa Fe, New Mexico)

[pdf]

2012

2011

A Quantized-Diffusion Model for Rendering Translucent Materials

Eugene d’Eon | Geoffrey Irving

ACM SIGGRAPH 2011

[pdf]

An Energy-Conserving Hair Reflectance Model

Eugene d’Eon | Guillaume Francois | Martin Hill | Joe Letteri | Jean-Marie Aubry

Eurographics Symposium on Rendering 2011

[pdf]

2008

A Layered, Heterogeneous Reflectance Model for Acquiring and Rendering Human Skin

Craig Donner | Tim Weyrich | Eugene d’Eon | Ravi Ramamoorthi | Szymon Rusinkiewicz

ACM SIGGRAPH Asia 2008

[pdf]

2007

GPU Gems 3

Chapter 14: Advanced Techniques for Realistic Real-Time Skin Rendering

Chapter 24: The Importance of Being Linear